Corporate has decided that we are going to reduce our spending on, and dependence on, on-site IT services. Many business applications are moving to cloud-hosted solutions such as Office365 and Google's productivity suite; data storage is being offloaded to XYZ solution, site-to-site collaboration is moving to ZYX solution, device management is being handled by ABC solution … plus there are many established SaaS solutions like Salesforce to deal with. These are essential productivity applications with critical, high-volume data transfer requirements.

Despite this trend of moving services to the cloud, the networking department is still responsible for ensuring sustainable, company-wide performance and uptime, i.e. ensuring sufficient bandwidth, maintaining acceptable QoS, building resilient access links, etc. In other words: 100% of the responsibility with less than 100% of the control. Let me raise a few considerations to plan and prepare for.

Consideration 1:

The networking team does its due diligence … it segments a few test devices to validate the performance and demands of the various cloud services.

Challenge to #1:

Every organization and every employee has different needs and requirements: not everyone shares large graphic files or manufacturing blueprints, or uses Office products or Salesforce. Also, each device family has different cloud access characteristics, since each cloud service must be able to push critical updates to every user device!

Consideration 2:

Validate the lessons learned from the test devices across multiple other locations or sites.

Challenge to #2:

Each location has a different number of people, different departments with different needs, and different ISPs (especially for global companies that maintain hundreds of locations). How many locations, and how much variety among them, is enough to be definitive?

Consideration 3:

How much bandwidth do I need to provision per location, per employee, or per application or service? OK, let's take the “easy answer” and deploy 10G or 40G links EVERYWHERE!

Challenge to #3:

Cold hard truth … it’s a complicated simultaneous equation with too many variables. Does 10G fiber make sense for a location recently added to our network through an acquisition that has only 8 employees? Basic financial prudence would rule this out.
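To make the “too many variables” point concrete, here is a minimal back-of-the-envelope sketch in Python. It treats a site's peak demand as the sum, over applications, of headcount × share of users × per-user rate, scaled by a concurrency factor and a headroom margin. Every number in it (the per-user rates, the 0.5 concurrency, the 1.3 headroom, the application mix) is a hypothetical placeholder, not measured data; in practice those inputs would have to come from monitoring each site.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AppProfile:
    per_user_mbps: float   # ASSUMED average demand of one active user, in Mbit/s
    share_of_users: float  # ASSUMED fraction of the site's staff using the app (0..1)


def estimate_site_mbps(
    headcount: int,
    apps: Dict[str, AppProfile],
    concurrency: float = 0.5,  # ASSUMED fraction of users active at the same time
    headroom: float = 1.3,     # ASSUMED safety margin for bursts and growth
) -> float:
    """Rough peak-bandwidth estimate for one site, in Mbit/s."""
    demand = sum(headcount * p.share_of_users * p.per_user_mbps for p in apps.values())
    return demand * concurrency * headroom


# Hypothetical application mix; replace with measured values for each site.
APPS = {
    "Office365": AppProfile(per_user_mbps=1.0, share_of_users=1.0),
    "Salesforce": AppProfile(per_user_mbps=0.5, share_of_users=0.25),
    "file sharing": AppProfile(per_user_mbps=5.0, share_of_users=0.5),
}

if __name__ == "__main__":
    for site, staff in {"acquired office": 8, "regional HQ": 400}.items():
        print(f"{site}: ~{estimate_site_mbps(staff, APPS):.0f} Mbit/s")
```

With these made-up inputs the 8-person office lands in the tens of Mbit/s and even a 400-person site stays below 1 Gbit/s. The exact figures are meaningless; what matters is that every term in the sum is a per-site, per-application unknown.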

Consideration 4:

The networking team must find a way to monitor every user, location, application, and device, across all time zones, in a scalable, non-proprietary manner.

Challenge to #4:

There is a plethora of SaaS monitoring tools, but can any organization really support an Office365 monitoring solution AND/OR a solution that requires hosted agents in the cloud AND/OR a <fill in the blank> SaaS monitoring solution AND/OR hire the staff to use, configure, and monitor all the various dashboards?

Flowmon addresses this monitoring requirement with a non-intrusive (agentless), technically scalable, and financially friendly OPEX model via our world-class network analytics solution. Let me explain a typical installation that delivers immediate value and provides ultimate scalability as you add new offices or locations.

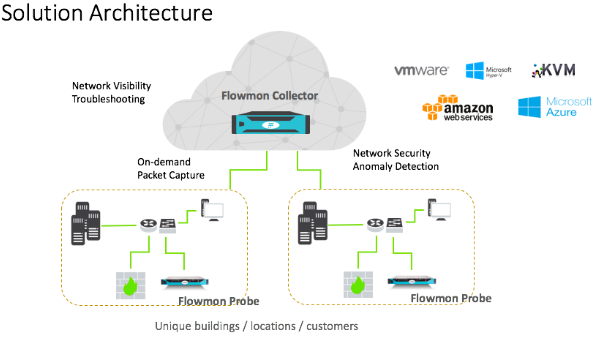

At each location to be monitored, deploy our best-in-class scalable Probes (network sensors). Flowmon Probes are available in both virtual and physical form factors. Each virtual instance supports up to 2x10G interfaces and can be deployed on VMware, Hyper-V, or KVM. Each physical appliance scales up to 2x100G, using our proprietary FPGA design to guarantee line-rate performance (careful … many vendors claim “100G support”, but Flowmon guarantees line-rate performance, not just interface support). The Probes export highly enriched IPFIX data with detailed Layer 7 extensions for DNS, DHCP, TLS, VoIP, etc.
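For readers new to IPFIX, the sketch below shows the framing a collector receives from each Probe, as defined in RFC 7011: a 16-byte message header (version 10, total length, export time, sequence number, observation domain ID) followed by sets, each starting with a 2-byte Set ID and a 2-byte length. It is a minimal illustration, not Flowmon code. It only walks the set headers; decoding the data records requires the matching templates, and the vendor-specific Layer 7 fields are out of scope here.

```python
import struct
from dataclasses import dataclass
from typing import List, Tuple

IPFIX_VERSION = 10                    # RFC 7011 version number
MSG_HEADER = struct.Struct("!HHIII")  # version, length, export time, sequence, domain ID (16 bytes)
SET_HEADER = struct.Struct("!HH")     # set ID, set length (4 bytes)


@dataclass(frozen=True)
class IpfixHeader:
    version: int
    length: int                 # total message length in bytes, header included
    export_time: int            # seconds since the UNIX epoch
    sequence_number: int
    observation_domain_id: int


def set_kind(set_id: int) -> str:
    """Classify a Set ID according to RFC 7011."""
    if set_id == 2:
        return "template"
    if set_id == 3:
        return "options template"
    if set_id >= 256:
        return "data"
    return "reserved"


def parse_ipfix_message(data: bytes) -> Tuple[IpfixHeader, List[Tuple[int, int]]]:
    """Parse the message header and list (set_id, set_length) for each set."""
    if len(data) < MSG_HEADER.size:
        raise ValueError("shorter than an IPFIX message header")
    hdr = IpfixHeader(*MSG_HEADER.unpack_from(data, 0))
    if hdr.version != IPFIX_VERSION:
        raise ValueError(f"not IPFIX (version {hdr.version})")
    if hdr.length < MSG_HEADER.size or hdr.length > len(data):
        raise ValueError("bad or truncated message length")
    sets: List[Tuple[int, int]] = []
    offset = MSG_HEADER.size
    while offset + SET_HEADER.size <= hdr.length:
        set_id, set_len = SET_HEADER.unpack_from(data, offset)
        if set_len < SET_HEADER.size or offset + set_len > hdr.length:
            raise ValueError(f"malformed set at offset {offset}")
        sets.append((set_id, set_len))
        offset += set_len  # set length covers the set header and any padding
    return hdr, sets


if __name__ == "__main__":
    # Build a tiny synthetic message: header plus one 8-byte data set (ID 256).
    body = SET_HEADER.pack(256, 8) + b"\x00" * 4
    msg = MSG_HEADER.pack(IPFIX_VERSION, MSG_HEADER.size + len(body), 1_700_000_000, 42, 7) + body
    hdr, sets = parse_ipfix_message(msg)
    print(hdr.observation_domain_id, [(sid, set_kind(sid)) for sid, _ in sets])  # 7 [(256, 'data')]
```

Because IPFIX is an open IETF standard, any collector that follows RFC 7011 can read this framing, which is what keeps the probe-to-collector link non-proprietary.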

The centralized dashboard, or “brain”, of the solution is the Flowmon Collector. The Collector is also available in virtual and physical form factors, and here lies our scalability advantage. Like the virtual Probe, the Flowmon virtual Collector supports VMware, Hyper-V, and KVM, but it can also be deployed in the AWS or Azure public clouds. This lets you allocate exactly the VM resources needed to handle the incoming data streams and increase them as the solution covers more and more locations! Alternatively, we offer high-performance physical appliances with RAIDx SATA or SSD storage to meet the desired performance. The Collector receives the IPFIX data from the Probes, analyzes it, builds the dashboards, provides alerting, etc. per location (whether that is another building, another city, or another branch office).
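The per-location view essentially comes down to grouping flow records by the Probe that exported them. Here is a minimal sketch, assuming each Probe exports with its own IPFIX observation domain ID and that a hypothetical `site_by_domain` table maps those IDs to location names. The `app` field stands in for whatever application classification the Collector derives and is likewise hypothetical; this is not how Flowmon implements its dashboards.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class FlowRecord:
    observation_domain_id: int  # IPFIX observation domain of the exporting probe (ASSUMED one per site)
    app: str                    # HYPOTHETICAL application label assigned by the collector
    octets: int                 # bytes carried by the flow


def bytes_per_site_and_app(
    records: Iterable[FlowRecord], site_by_domain: Dict[int, str]
) -> Dict[str, Dict[str, int]]:
    """Sum flow bytes per (site, application); unmapped exporters go to 'unknown'."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for r in records:
        site = site_by_domain.get(r.observation_domain_id, "unknown")
        totals[site][r.app] += r.octets
    return {site: dict(apps) for site, apps in totals.items()}


def top_apps(totals: Dict[str, Dict[str, int]], site: str, n: int = 3) -> List[Tuple[str, int]]:
    """Return the n heaviest applications at one site, largest first."""
    return sorted(totals.get(site, {}).items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__":
    site_by_domain = {1: "HQ", 2: "Branch"}  # hypothetical mapping
    records = [
        FlowRecord(1, "Office365", 500),
        FlowRecord(1, "Salesforce", 200),
        FlowRecord(2, "Office365", 100),
        FlowRecord(1, "Office365", 300),
    ]
    totals = bytes_per_site_and_app(records, site_by_domain)
    print(top_apps(totals, "HQ"))  # [('Office365', 800), ('Salesforce', 200)]
```

Adding a location in this model only means adding one entry to the mapping table; the aggregation logic does not change.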

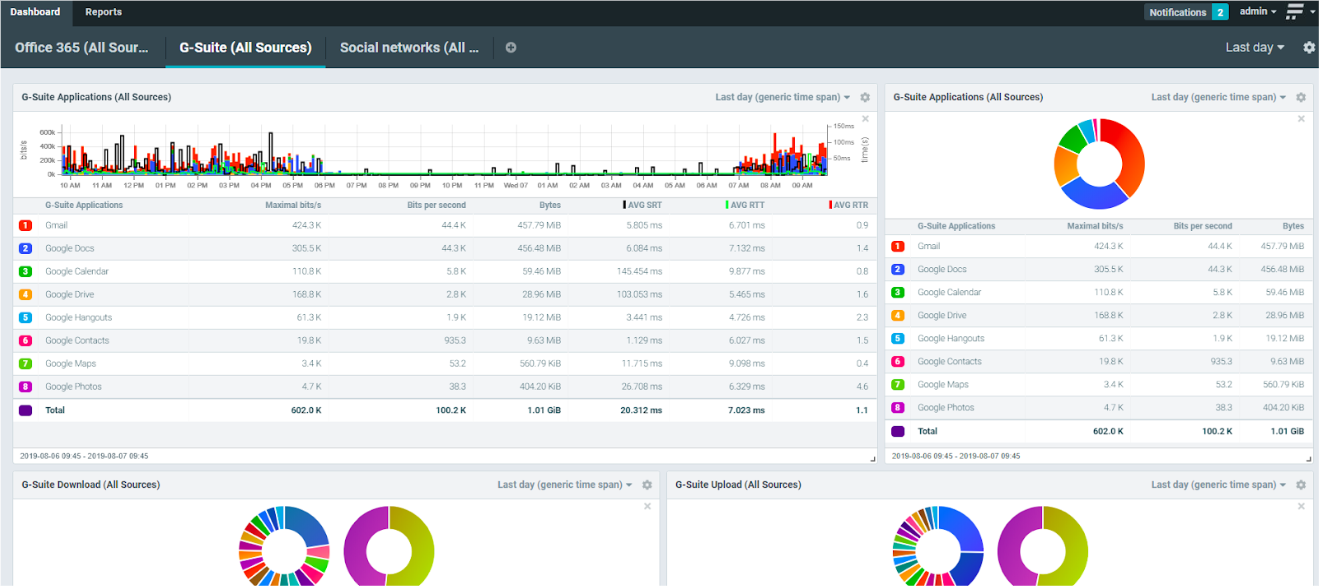

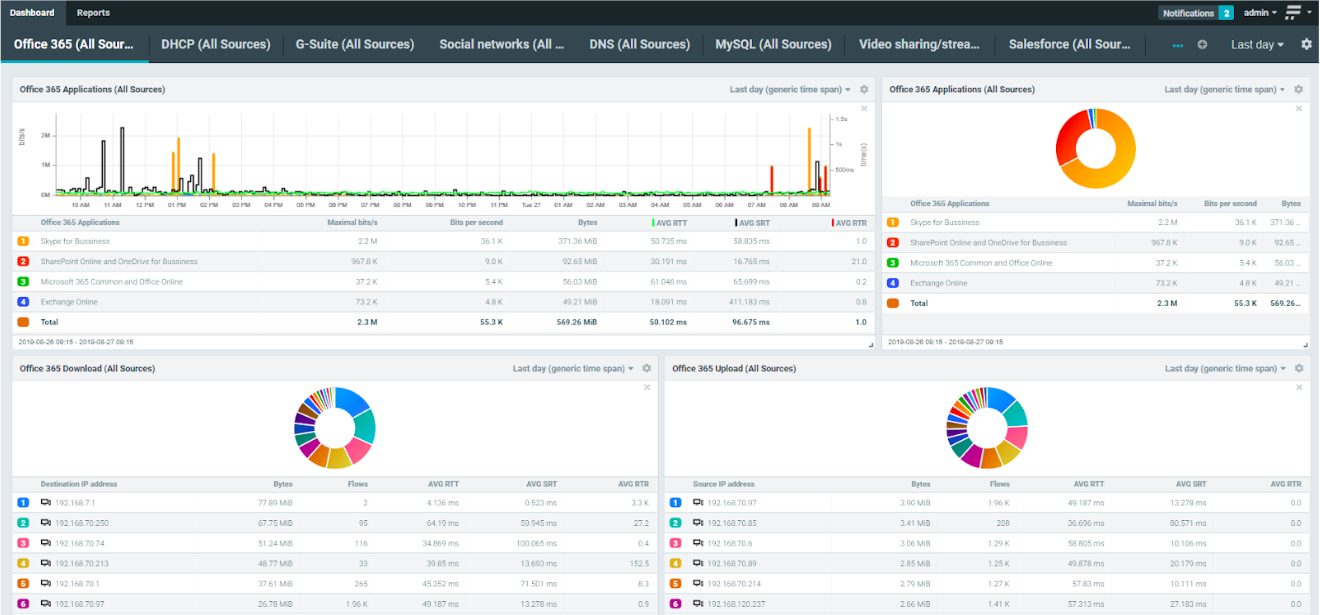

Ready-to-use dashboards for all common cloud apps, such as Google services, Office365, MS Teams, file sharing, and Salesforce, can be displayed within minutes of deployment (see the example screenshots).

The high-level solution architecture diagram shows only two locations, but there is effectively no limit to the number of locations.

Whether you are a global organization or an MSP, Flowmon can provide actionable dashboards that let you manage your own data growth, or that of your customers, as you continue to rely on external services. Interested in more details? We would be happy to discuss how to make this a reality in your network environment.

Flowmon Networks, a leader in network visibility, monitoring and security solutions, is keeping up with the ever-increasing complexity of dynamically evolving IT environments AND commercially meeting the needs of its customers.